Hub and Spoke network topology in Azure

Everything you need to know to implement this design.

Back in late 2016 I was lucky enough to go to Microsoft Ignite in Atlanta (USA), which was a bit of a big deal as it was my first major conference. One of the takeaways from the various technical sessions on Azure networking I attended was that Microsoft wanted to put a great deal of emphasis on the use of a Hub and Spoke network topology. I even blogged about it, as I also thought this was a great option given the new Azure networking functionality and services that were made available around that time.

Since then I’ve worked with a number of customers on this topology. I’ve seen different requirements and use cases, as well as different approaches to routing and security. What’s been consistent, though, is the idea of adopting a “shared services” approach to infrastructure in Azure to reduce complexity, reduce duplicate resources and therefore reduce costs. Microsoft even recommends that as part of the “best practices”1 around Hub and Spoke in Azure.

Now the cloud is not cheap and I don’t think the intention was ever for it to be cheap. There is immense value though, and amazing functionality that is growing at an increasingly rapid rate. So, before this all turns into yesterday’s news (even though this is a little late), I thought I’d finally extract everything I’ve run into along the Hub and Spoke design and implementation journey and output that in the form of this blog post.

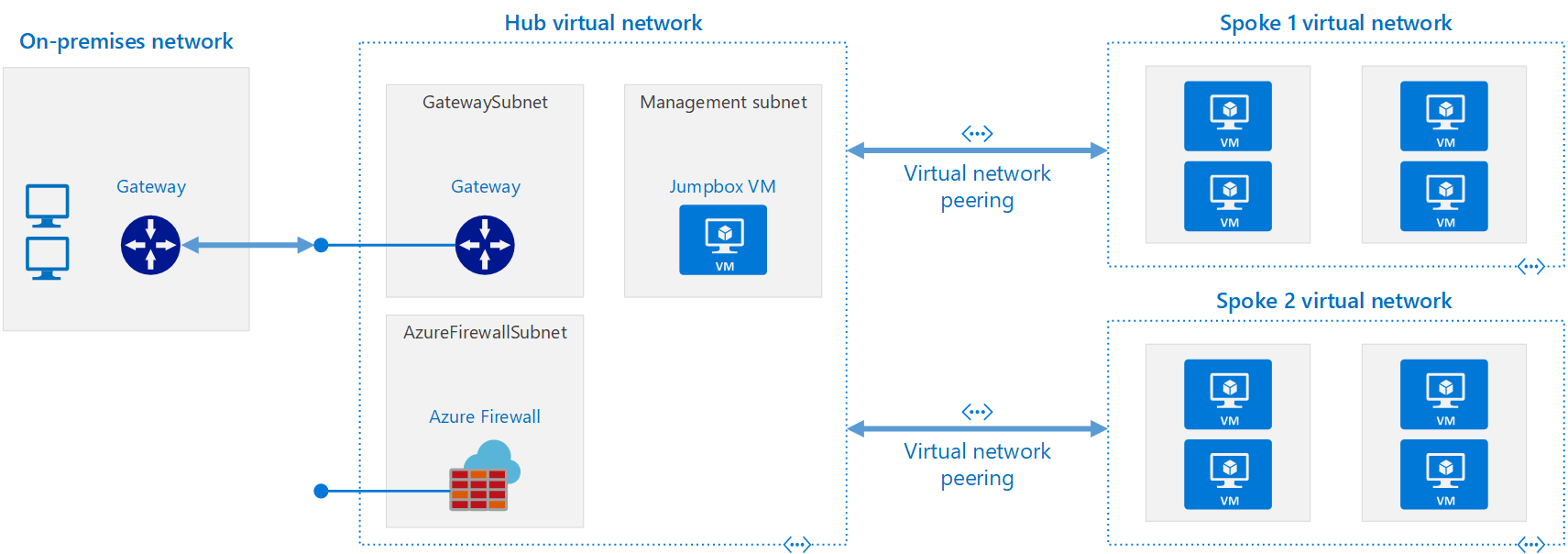

Before I begin, here’s a quick snapshot of what Microsoft documentation2 outlines as an example Hub and Spoke network topology in Azure can look like:

While this is a sound solution, I do have some opposing thoughts on it, particularly around the Hub and reducing complexity within that VNet. For the most part, I like to avoid complexity where complexity is there for complexity’s sake. A great design can also be a simple design. Networking in Azure (or the cloud in general), while simpler than in traditional, physical networks, is anything but simple. When implementing a Hub and Spoke solution, here are some key considerations to take into account:

Hub

Generally the Hub is where you would want to deploy core services and where you’d extend Azure networks to external networks; be that on-premises via ExpressRoute or a VPN of some kind, or to the greater internet (using Azure internet connectivity).

- Options

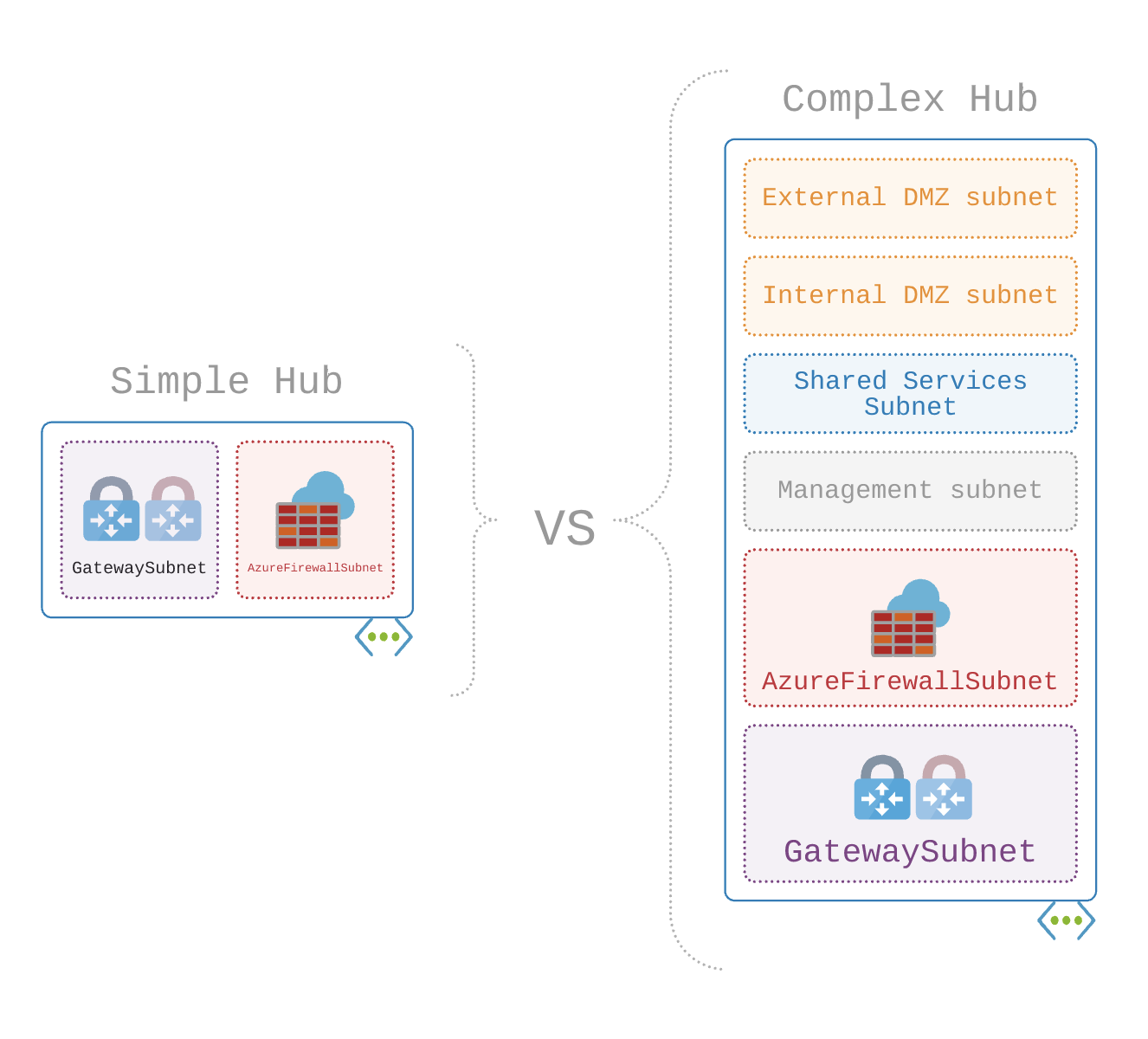

I think there are two schools of thought when it comes to the Hub. You either centralise a bunch of logical network segments or zones to “reduce complexity and costs (ingress and egress VNet traffic)” or you keep the Hub rather lean, limited to a select set of services.

The first approach is what’s recommended in the Microsoft Best Practices documentation3 and can work for most organisations. Going back to the idea of reducing complexity, I find this solution can run counter to that point. While it seems easy to create a bunch of subnets within that Hub VNet, the real world outcome can be difficult depending on downstream design decisions.

The impact is increased complexity in RouteTables or User Defined Routes (UDR), and larger security rule sets within the centralised security/routing solution, be that Azure Firewall or a Network Virtual Appliance (NVA). That’s because a default System RouteTable rule allows intra VNet communication out of the box. It’s when you need to override that with custom RouteTables/UDRs that the complexity comes in.

Let’s take another approach. Consider the Hub VNet as more of a ‘transitive service’ with only a core set of centralised functionality. Reducing the number of subnets and deployed services can streamline security controls and static routes. This even opens up the possibility of deploying more infrastructure in Azure, as we define logical zones as being aligned to VNets rather than subnets within VNets (more on logical zones later).

- Transit Hubs

It’s important to note that VNets are non transitive. If you have 3 VNets (as outlined in the example below) you can’t route from VNet 1 to VNet 3 via VNet 2. Azure just doesn’t work that way. What’s confusing though is that you can have a transitive Hub (sort of)4.

If we deploy a Gateway in the Hub, traffic from Spokes to the Gateway can traverse the Hub via VNet Peering configuration (‘use remote gateway’ and ‘allow gateway transit’ settings respectively). This effectively makes the Hub transitive. Now, Spoke to Spoke network connectivity can then be enabled if Azure Firewall (or an NVA) is deployed in the Hub. Again, that kind of makes the Hub transitive. Confused yet?
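To make the non transitive behaviour a little more concrete, here’s a minimal Python sketch. It is a toy model only: the VNet names and the `hub_forwards` flag are my own illustrative assumptions, not Azure objects or API calls. It simply captures the idea that peering connects direct neighbours, and Spoke to Spoke traffic only flows when something in the Hub (Azure Firewall or an NVA, plus the matching UDRs) forwards it.

```python
# Toy model: peering is a set of unordered VNet pairs (names are hypothetical).
PEERINGS = {
    frozenset({"hub", "spoke-a"}),
    frozenset({"hub", "spoke-b"}),
}


def peered(a: str, b: str) -> bool:
    """True only when the two VNets are directly peered."""
    return frozenset({a, b}) in PEERINGS


def can_reach(src: str, dst: str, hub: str = "hub", hub_forwards: bool = False) -> bool:
    """Direct peering always works; Spoke to Spoke needs a forwarding Hub.

    hub_forwards stands in for Azure Firewall or an NVA in the Hub, together
    with UDRs in the Spokes that send traffic to it (an assumption of this model).
    """
    if peered(src, dst):
        return True
    return hub_forwards and peered(src, hub) and peered(hub, dst)


print(can_reach("spoke-a", "hub"))                         # True
print(can_reach("spoke-a", "spoke-b"))                     # False
print(can_reach("spoke-a", "spoke-b", hub_forwards=True))  # True
```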

- Multiple Hubs

You can also provision multiple Hubs5 in either the same Azure region or across different regions. Hubs can also connect to other Hubs using several connectivity methods:

- VNet Peering: connect a pair of Hubs within the same region using VNet Peering

- VNet Global Peering: connect a pair of Hubs in different regions using VNet Global Peering

- ExpressRoute: connect two Hubs with VNet ExpressRoute Gateways to ExpressRoute and use ER to route between the two Hubs

- VPN: configure a VPN using Network Virtual Appliances (NVA) to connect two VNets (probably the least efficient way to achieve connectivity)

Virtual WAN

This service 6 (GA as of late 2019) ‘provides optimised and automated branch connectivity’ to Azure. Combining a number of services and scaling them out for considerably higher throughput and capacity, Virtual WAN takes WAN connectivity and a Hub and Spoke approach to the Nth degree. The Hub VNet deployed is Microsoft managed, so there are limitations on what can be provisioned. Even trivial things like changes to address space, subnets etc are fairly locked down. While it’s an evolving service, the ‘locked in’ nature can be a good or bad thing, depending on requirements. It’s something to consider to streamline any Hub and Spoke deployment.

Logical zones

If you come from a traditional network background you would be familiar with the concept of zones and defining boundaries between network segments. Frameworks like ISO 27001, NIST, COBIT etc help with establishing information security controls in organisations. These can be used in networks by defining logical zones, labelling them, defining common workloads and defining the intra and inter connectivity.

Some common examples are Untrusted, Semi-Trusted or DMZ, Trusted or Controlled, Restricted and Secured. Whatever your framework alignment or usage of logical zones in Azure, some lessons learned from my experiences are as follows:

- Logical zone containers: where is the boundary for any given zone? Are we talking VNets, subnets or something more granular?

  - My preference is to use VNets as containers to reduce routing complexity (RouteTables/UDRs)

  - Using subnets as the container leads to lots of routes added to RouteTables

  - This is where the Best Practices recommended by Microsoft and my opinion differ

  - The common issue is around the Hub VNet: hosting multiple zones within the Hub and using subnets as containers

  - When you introduce security controls (for example Azure Firewall or an NVA), that complexity within the Hub can snowball and Ops teams can be bogged down with any changes

  - Using VNets as containers has some drawbacks, namely inter VNet routing and data ingress and egress charges, that need to be considered in designs as well

- Definition and design is very important: you can’t just throw together a few VNets, call them different names and expect to comply with any framework or standard

  - The better the effort in setting up reference architectures up front, the better the downstream effort

- Setting enough zones vs creating too many zones

  - While aligning to frameworks is great, as a lot of the thought around definition and execution is already done, sometimes frameworks need to cater for solutions to the Nth degree

  - That overly complex framework might not apply to the relevant environment and you’ll end up over engineering a solution

Spokes

With a Hub provisioned as a VNet, naturally Spokes are other VNets that are connected to the Hub via either VNet Peering or VNet Global Peering. The key benefit of a Hub and Spoke topology is leveraging VNet Peering. When doing so, you can peer up to (as of March 2020) 500 VNets to a Hub VNet7. So scale and growth can easily be accommodated with a Hub and Spoke solution.

Spokes come down to several means of implementation:

- Per logical zone: this is a nice big container with fewer VNets and likely larger VNets (by address space)

- Per business unit or teams: a little more granular and a means to contain workloads by the business units or teams that design, build and maintain those workloads

- Per workload: as granular as you can get really. Every workload or app has its own VNet, which at scale, can be a little tricky to manage.

Any other implementation is just a variation of the above options.

VNet Peering

VNet Peering is the core of what makes a Hub and Spoke network topology work (I think I mentioned that before in this blog post). It’s the means to join two VNets to combine the address space and effectively make one network segment. VNet Peering spans any VNets in the same region, even across subscriptions which is handy if we want to separate out workloads should resource limitations be a factor when deploying.

VNet Global Peering

Extending VNet Peering a step further and across geo locations: VNet Global Peering offers the same functionality as VNet Peering but, you guessed it, across Azure Regions. You can even peer VNets from one side of the globe to the other, which is pretty amazing. Now, the latency won’t be spectacular, but traffic will traverse Microsoft’s backbone and not the public internet.

Connecting Hub VNets via VNet Global Peering can reduce the constraints of ExpressRoute components. ExpressRoute and the Hub VNet ER Gateway have to be set to specific speed SKUs when provisioning, and those SKUs limit performance. VNet Global Peering between Hubs can get around those performance limitations and leverage the Azure Network Fabric backbone, which has speeds in the many GBs per second as standard.

Three important considerations around the use of VNet Global Peering are:

- Routing: VNet Global Peering routing will take precedence over ExpressRoute as the two connected VNets become one address space, effectively one network segment.

- Traffic charges: VNets connected directly to ExpressRoute (if the correct SKU is used) can have unlimited network traffic (ingress and egress). With Global VNet Peering, ingress and egress charges will apply.

- Connected VNets use of Gateways: only one Gateway can be used by a given VNet. Any attempt to route over opposing region Gateways would need to traverse Azure Firewall or equivalent NVA.

Intra VNet routing

The general vibe I’ve taken from security teams is that they would prefer to have every packet inspected to the Nth degree to check that nothing nefarious is happening across an organisation’s network. That’s a gross exaggeration and a generalisation of all security folk, but it’s a recurring theme I’ve been a witness to.

This is where differences of opinion, and more precisely differences in requirements, come about. Commonly a centralised firewall is deployed in the Hub to facilitate routing between logical zones, but also to apply coarse grain security controls, and possibly fine grain security controls as well. My preference is to use native cloud tooling, namely Azure Firewall (and also NSGs to some degree). There’s a host of pros and cons for that versus a bespoke Network Virtual Appliance from an established security vendor.

So while I like to leverage Azure Firewall within the Hub, I think inspecting every packet is overkill. Again, that’s going to ruffle some feathers. So intra VNet routing is generally something that I’m comfortable recommending to customers. Different logical zones (covered earlier) might dictate that it can’t happen, but for the most part it can be an important consideration.

To allow or deny that intra VNet traffic, Azure RouteTables/UDRs may or may not be required. If you allow intra VNet routing between whatever subnet constructs and peered VNets you have, there are built in System RouteTables to facilitate this. It’s only when you want to manipulate this and force traffic via the Hub and its centralised security controls that custom routes come in. This is done via a simple RouteTable/UDR entry for a given destination address space: send traffic to Azure Firewall. Easy. Well, not so fast.

Going back to my point around logical zones and hosting multiple logical zones within a Hub, intra VNet routing can get rather complicated rather quickly. I like to avoid complexity where possible, so this goes back to the suggestion around keeping logical zones at the VNet level where possible.

Inter VNet routing

This is achievable through the use of Azure Firewall (or an NVA) within the Hub. That’s the simplest way, and it avoids overly complicated solutions. By that, I mean Spoke to Spoke routing can also be achieved directly via VNet Peering between the two Spokes. I don’t like to recommend this unless it’s a bespoke scenario. Again, it comes down to overly complicated routing and RouteTable management that, when avoidable, should be avoided!

RouteTables galore!

RouteTables or User Defined Routes (UDR) are going to be used quite a lot in the Hub and Spoke model. By default, when VNet Peering is configured between two VNets (the Hub and any given single Spoke), the address spaces of those two VNets are effectively joined into a single address space. RouteTables are needed to manually (via static routes) direct traffic to Azure Firewall or an NVA to allow/route/deny/block traffic for Hub to Spoke and Spoke to Hub network connectivity.

Moreover, VNets are non transitive, meaning you cannot have Spoke A exchange traffic with Spoke C via the Hub natively. Azure Firewall or an NVA is needed to facilitate this functionality.

RouteTables and “Disable BGP route propagation”

Further expanding on the usage of RouteTables: when ExpressRoute or equivalent is involved, learned routes can propagate to RouteTables, for example from the Hub (naturally where ExpressRoute would be provisioned) to Spokes. This can be problematic if you want to direct all traffic via Azure Firewall or an equivalent NVA in the Hub. That’s because Azure RouteTables work by sending traffic to the most specific route.8 So with BGP propagation enabled, a Spoke VNet (with the appropriate additional Peering configuration) could bypass Azure Firewall and route directly via the VNet ER Gateway in the Hub to on-premises networks.
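Here’s a rough Python sketch of why that bypass happens. It is a simplified model of route selection, not Azure’s implementation: the longest matching prefix wins and, where prefixes are equally specific, a UDR beats a BGP route, which beats a system route. The prefixes, the firewall IP and the `propagate_bgp` parameter (standing in for the “Disable BGP route propagation” setting) are all assumptions for illustration.

```python
import ipaddress
from dataclasses import dataclass

# Tie-break order when prefixes are equally specific: UDR, then BGP, then system.
SOURCE_PRIORITY = {"udr": 0, "bgp": 1, "system": 2}


@dataclass(frozen=True)
class Route:
    prefix: str
    next_hop: str
    source: str  # "udr", "bgp" or "system"


def effective_route(routes, destination, propagate_bgp=True):
    """Pick the route for a destination: longest prefix first, then source priority."""
    dest = ipaddress.ip_address(destination)
    candidates = [
        r for r in routes
        if (propagate_bgp or r.source != "bgp")
        and dest in ipaddress.ip_network(r.prefix)
    ]
    if not candidates:
        return None
    return min(
        candidates,
        key=lambda r: (-ipaddress.ip_network(r.prefix).prefixlen, SOURCE_PRIORITY[r.source]),
    )


# Hypothetical Spoke subnet: a default route to the firewall plus an
# on-premises prefix learned over ExpressRoute via the Hub gateway.
spoke_routes = [
    Route("10.1.0.0/16", "VnetLocal", "system"),
    Route("0.0.0.0/0", "VirtualAppliance 10.0.1.4", "udr"),
    Route("192.168.0.0/16", "VirtualNetworkGateway", "bgp"),
]

# With propagation on, the more specific BGP route wins and skips the firewall.
print(effective_route(spoke_routes, "192.168.10.5").next_hop)
# With propagation off, only the default route to the firewall matches.
print(effective_route(spoke_routes, "192.168.10.5", propagate_bgp=False).next_hop)
```

The first call returns the gateway as next hop and the second returns the firewall, which is the behaviour most security teams are after.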

Using or not using remote gateways

This is an optional configuration item in VNet Peering, depending on whether the Hub has Azure Firewall (or an NVA) deployed or you have simply configured the Hub to be transitive. When using Azure Firewall (or an NVA), it isn’t necessary to enable the ‘use remote gateway’ configuration, as Azure Firewall would be proxying the traffic from the Spoke to ExpressRoute or an equivalent service tied to the Hub VNet gateway. If you enable the use remote gateway functionality, Azure Firewall could be bypassed and traffic could go directly to the gateway, which may break any security requirements that are mandated.

Furthermore, in a scenario with a Spoke connected to multiple Hubs, you can only use one remote Gateway for the Spoke9. I dare say that the first VNet peering configuration would take effect, so any additional Peering (secondary Hubs) to that Spoke would just result in nothing happening, defaulting to the first peering configuration.
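A quick way to sanity check a planned set of peerings against these two rules is sketched below in Python. The `Peering` class and `validate` function are hypothetical helpers of my own, not part of any Azure SDK; they only encode that ‘use remote gateway’ on the Spoke side needs ‘allow gateway transit’ on the Hub side, and that a Spoke can use only one remote gateway.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Peering:
    local: str
    remote: str
    allow_gateway_transit: bool = False  # set on the Hub side of the peering
    use_remote_gateways: bool = False    # set on the Spoke side of the peering


def validate(peerings):
    """Return a list of problems found in a planned set of peerings."""
    problems = []
    by_link = {(p.local, p.remote): p for p in peerings}
    users = {}
    for p in peerings:
        if not p.use_remote_gateways:
            continue
        users.setdefault(p.local, []).append(p.remote)
        reverse = by_link.get((p.remote, p.local))
        if reverse is None or not reverse.allow_gateway_transit:
            problems.append(f"{p.local} -> {p.remote}: remote side does not allow gateway transit")
    for vnet, remotes in users.items():
        if len(remotes) > 1:
            problems.append(f"{vnet}: uses remote gateways on {len(remotes)} peerings, only one is possible")
    return problems


# Hypothetical plan: one Spoke peered to two Hubs, asking for both gateways.
plan = [
    Peering("hub-east", "spoke-a", allow_gateway_transit=True),
    Peering("hub-southeast", "spoke-a", allow_gateway_transit=True),
    Peering("spoke-a", "hub-east", use_remote_gateways=True),
    Peering("spoke-a", "hub-southeast", use_remote_gateways=True),
]

for problem in validate(plan):
    print(problem)
```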

Azure Bastion

If you’re planning on using Azure Bastion to administer VM resources, this is a contentious topic as I’ve stated earlier. In terms of Hub and Spoke be aware that currently (as of March 2020) Azure Bastion can only be deployed on a per VNet basis with no means to extend remote connectivity outside of that container. For use with Hub and Spoke, you’ll need to deploy Azure Bastion in the Hub and each Spoke with VM workloads to use it for secure, browser based access.

NSGs and ASGs

I’ve said it before and I’ll say it again: NSGs are pretty simple but effective, and for what they are, I think they’re rather good. In a Hub and Spoke topology, usage should be limited to a single scenario: fine grained security controls on Network Interfaces (NIC) only. In the Hub, Azure Firewall or an equivalent NVA should be providing either both coarse and fine grain controls, or simply coarse grain controls.

Therefore, subnet level NSGs are really not necessary, so the only use case is NIC based assignment. Word of warning though: the more VM instances you have deployed, the more unique NSGs there are to be managed. However, this is where ASGs can come into play to reduce some of that complexity by using a naming context rather than an IP address context for NSG rules. It’s not perfect and won’t reduce the number of NIC NSGs, but it can help with Ops.
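To illustrate the naming context idea, here’s a small Python sketch. It is a toy model, not how NSGs are actually evaluated (there are no priorities or default rules here, and anything not matched is simply denied). The ASG names, IP addresses and port are made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    source_asg: str
    dest_asg: str
    port: int


# Hypothetical ASG membership, keyed by ASG name.
ASG_MEMBERS = {
    "asg-web": {"10.10.1.4", "10.10.1.5"},
    "asg-sql": {"10.10.2.4"},
}

# One allow rule written against names, not IP addresses.
RULES = [Rule("asg-web", "asg-sql", 1433)]


def allowed(src_ip, dst_ip, port):
    """True when any rule matches the ASG membership of both ends and the port."""
    return any(
        src_ip in ASG_MEMBERS[r.source_asg]
        and dst_ip in ASG_MEMBERS[r.dest_asg]
        and port == r.port
        for r in RULES
    )


print(allowed("10.10.1.6", "10.10.2.4", 1433))  # False, not in asg-web yet

# A new web VM joins the ASG; the rule itself never changes.
ASG_MEMBERS["asg-web"].add("10.10.1.6")
print(allowed("10.10.1.6", "10.10.2.4", 1433))  # True
```

The point of the design is that Ops only touch group membership when VMs come and go, rather than editing IP based rules.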

Address space

There’s a total of 17,891,328 private IPs available for organisations when deploying a network if you leverage only the RFC1918 private reserved ranges (10.0.0.0 /8, 172.16.0.0 /12 and 192.168.0.0 /16). If you divide that into /16 ranges (blocks of 65,536 IPs), that’s only 273 /16 subnets available. In larger organisations where the network is very flat and the 10.0.0.0 /8 range is used almost everywhere, getting a large uniform block like a /16 for use in the cloud can be a challenge.
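If you want to check those numbers yourself, Python’s standard `ipaddress` module does the arithmetic in a few lines. This is just a verification sketch; the variable names are mine.

```python
import ipaddress

# The three RFC1918 private ranges.
RFC1918 = [
    ipaddress.ip_network(n)
    for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]

# Total private addresses across all three ranges.
total_addresses = sum(net.num_addresses for net in RFC1918)

# Number of /16 blocks each range can be carved into, summed.
slash16_blocks = sum(len(list(net.subnets(new_prefix=16))) for net in RFC1918)

print(total_addresses)  # 17891328
print(slash16_blocks)   # 273
```

That breaks down as 256 blocks from the /8, 16 from the /12 and 1 from the /16.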

The main point I want to make around address space is keeping consistency around usage to simplify routing (via RouteTables/UDRs, Azure Firewall rules and NSG rules). Nice consistent ranges assigned to Hub and Spokes means you can summarise subnets and make more coarse grain rules.
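As a small illustration of why consistency pays off, the Python sketch below uses `ipaddress.collapse_addresses` to summarise two sets of Spoke ranges. The prefixes are made up for the example: the contiguous set collapses into a single /16 you can write one rule against, while the scattered set stays as three separate entries.

```python
import ipaddress


def summarise(prefixes):
    """Collapse a list of prefixes into the fewest covering networks."""
    nets = [ipaddress.ip_network(p) for p in prefixes]
    return [str(n) for n in ipaddress.collapse_addresses(nets)]


# Hypothetical Spoke ranges: one tidy plan, one grown organically.
consistent = ["10.10.0.0/18", "10.10.64.0/18", "10.10.128.0/18", "10.10.192.0/18"]
scattered = ["10.10.0.0/24", "10.20.5.0/24", "172.16.9.0/24"]

print(summarise(consistent))  # ['10.10.0.0/16']
print(summarise(scattered))   # ['10.10.0.0/24', '10.20.5.0/24', '172.16.9.0/24']
```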

- Address space gotcha

I’ve worked with customers that don’t want to ‘waste address space’ by preallocating larger subnets to VNets (Spokes). Their preference is to assign smaller network ranges to VNets and consume the address space as required. My preference is to not have to worry about address space and not micro manage it. The incremental approach works in isolated VNet scenarios or VNets that are connected to a VPN or ExpressRoute circuit.

When dealing with VNet Peering or VNet Global Peering, incremental additions to the address space of VNets get difficult. VNet Peering and VNet Global Peering (as of March 2020) do not provide dynamic updates to the peered configuration once a VNet address space is extended. In other words, if you have two VNets that are peered and one VNet needs its address space extended, the peering needs to be deleted, the address space extended, then the peering re-established. In a Hub and Spoke scenario this can get complicated, especially in production environments.
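One way to avoid that dance is to carve out uniform blocks up front. The Python sketch below shows the idea with the standard `ipaddress` module; the regional /16, the /20 Spoke size and the VNet names are assumptions for the example, so size them to suit your own environment.

```python
import ipaddress


def allocate_spokes(region_block, spoke_prefix, names):
    """Hand out equal sized blocks from a regional range, in order, one per name."""
    subnets = ipaddress.ip_network(region_block).subnets(new_prefix=spoke_prefix)
    return {name: str(net) for name, net in zip(names, subnets)}


# Hypothetical plan: a /16 per region, a /20 per VNet.
plan = allocate_spokes("10.10.0.0/16", 20, ["hub", "spoke-prod", "spoke-nonprod"])

for name, block in plan.items():
    print(f"{name}: {block}")
# hub: 10.10.0.0/20
# spoke-prod: 10.10.16.0/20
# spoke-nonprod: 10.10.32.0/20
```

A /16 holds sixteen /20 blocks, so this plan leaves thirteen spare for future Spokes without touching any existing peering.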

SLAs and availability

- Azure Firewall vs NVAs

With every security solution in Azure, be it several clustered NVAs or the architecture behind Azure Firewall, basically a ‘load balancer sandwich’ is used 10. Most are not really highly available and are stateless. Azure Firewall (this isn’t a plug by the way) is highly available and stateful. The choice is more around how much availability and what SLA you want. You can go with the standard option of 99.95% 11 with a single Azure Availability Zone, or leverage two or more (three maximum) and get a 99.99% SLA- woohoo!

Now that’s not to say NVAs are not good enough, or don’t get as good an SLA. You can get a 99.95% SLA, but each vendor states it differently and not all of them work that great.
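To put those percentages in perspective, here’s a quick bit of Python arithmetic converting an SLA figure into the maximum downtime it permits. I’ve used a 365 day year for intuition only; actual SLA terms are defined by the provider and are typically measured over a billing period.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600, ignoring leap years


def max_downtime_minutes(sla_percent):
    """Minutes of downtime per year that still meet the given SLA percentage."""
    return MINUTES_PER_YEAR * (100 - sla_percent) / 100


for sla in (99.95, 99.99):
    print(f"{sla}% -> {max_downtime_minutes(sla):.1f} minutes per year")
# 99.95% -> 262.8 minutes per year
# 99.99% -> 52.6 minutes per year
```

So the jump from 99.95% to 99.99% is roughly the difference between four and a half hours and under an hour of allowable downtime a year.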

- ExpressRoute Bowtie

Most organisations that would consider a Hub and Spoke topology would also consider risk when designing solutions to be an important factor in design decisions. Reducing risk can be achieved with the deployment of Azure resources across multiple regions. In Australia, our two main regions are Australia East (Sydney) and Australia Southeast (Melbourne). Most organisations would then deploy workloads (in a Hub and Spoke topology) across both regions.

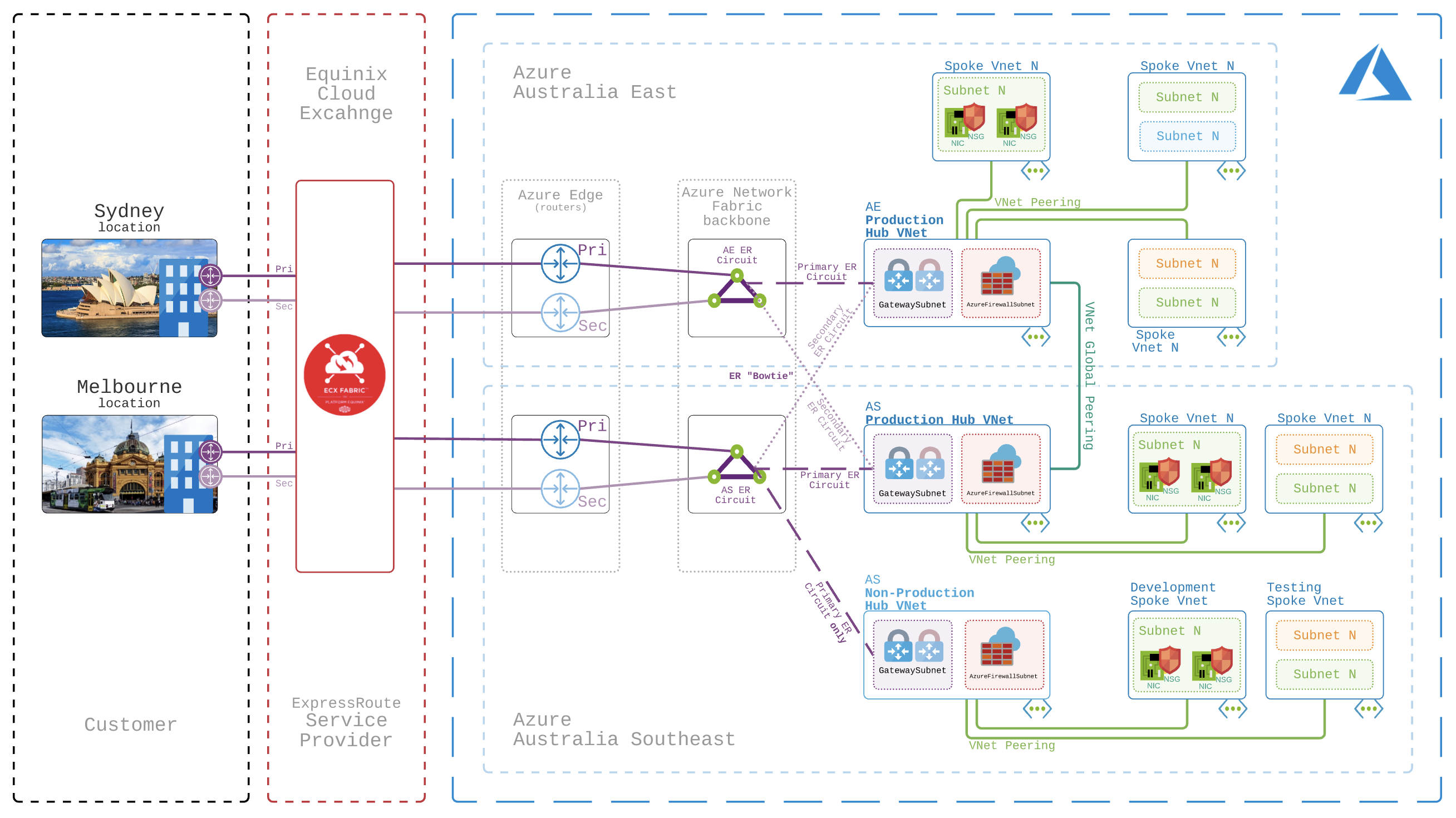

When we take ExpressRoute into consideration after provisioning in both regions, failover and redundancy strategies are used to again reduce risk. An ExpressRoute “Bowtie” 12 design can be used to route between regions should the local ExpressRoute circuit go offline. A Hub VNet is then connected to two ExpressRoute circuits and weighting is used to mandate that the local ER circuit is used over its partner region unless local is offline. This can be represented via the following diagram:

Additionally it’s important to also consider that in some cases ExpressRoute Premium upgrade is required to facilitate the “Bowtie” design, i.e. connecting a VNet to ExpressRoute in another Azure Region. Within Australia though, Australia East and Southeast regions are within the same “geopolitical” region, which means that ER Premium is not required for cross region VNet to ER connectivity- woohoo!
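The weighting behaviour in the “Bowtie” can be sketched in a few lines of Python. This is a toy model under my own assumptions: the circuit names and weight values are made up, and a simple `online` flag stands in for the real mechanism, where routes from a failed circuit are withdrawn. The only idea it captures is that the higher weighted connection is preferred while it is available.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ErConnection:
    circuit: str
    weight: int
    online: bool = True


def preferred_circuit(connections):
    """Return the online circuit with the highest weight, or None if all are down."""
    live = [c for c in connections if c.online]
    if not live:
        return None
    return max(live, key=lambda c: c.weight).circuit


# Hypothetical Sydney Hub: local circuit weighted above the Melbourne circuit.
sydney_hub = [ErConnection("er-sydney", 100), ErConnection("er-melbourne", 10)]
print(preferred_circuit(sydney_hub))  # er-sydney

# Local circuit offline: traffic falls back to the partner region.
sydney_hub_failed = [ErConnection("er-sydney", 100, online=False), ErConnection("er-melbourne", 10)]
print(preferred_circuit(sydney_hub_failed))  # er-melbourne
```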

- Hub VNet Gateway

Every Virtual Network Gateway that is deployed to a VNet consists of two instances that are provisioned out of the box in an Active/Passive state 13. There is also an option to configure them as Active/Active. Microsoft does this to allow for planned and unplanned service disruption.

It’s important to note that with the Active/Passive implementation, any change, either planned or unplanned, will result in an outage. That timeframe can be as little as 10 seconds (that’s little?) for a planned outage or 1-2min for an unplanned outage (Whaaaaatttt?????).

On the other hand, there is Active/Active. Depending on the customer side configuration (whether there is a single device or two devices) you can end up with a “Bowtie” or full mesh configuration. This is the most robust option and, in the event of a failed connection to one gateway, there is an automated process to divert traffic to the alternate device. There’s still a disruption, but it’s very minor, within the milliseconds rather than seconds range (so I’ve been told).

So what could a real world Hub and Spoke design look like?

With all the above considerations, I’ve put together the following diagram as an overall solution. Even though it’s rather high level, it’s as comprehensive as possible to cover off a bunch of the key factors that can go into a Hub and Spoke design in a single image.

For most designs, a Hub and Spoke is deployed to better utilise connectivity to on-premises networks (ExpressRoute or VPN) and consolidate resources to avoid duplication. In this example, ExpressRoute takes centre stage to maximise connectivity to various Spoke VNets across the Australia East and Southeast regions. VNet Global Peering is used to connect the production Hubs, bypassing ExpressRoute. As Australia East and Southeast are considered to be in the same geopolitical area, no Premium upgrade is required to leverage that functionality. Azure Firewall is used as the central means to allow/deny traffic between network segments, but boosted with a defence in depth approach to security, with Network Security Groups (NSG) coupled with Application Security Groups (ASG) applied to the Network Interfaces (NIC) of Virtual Machine instances. Everything else is pretty standard stuff.

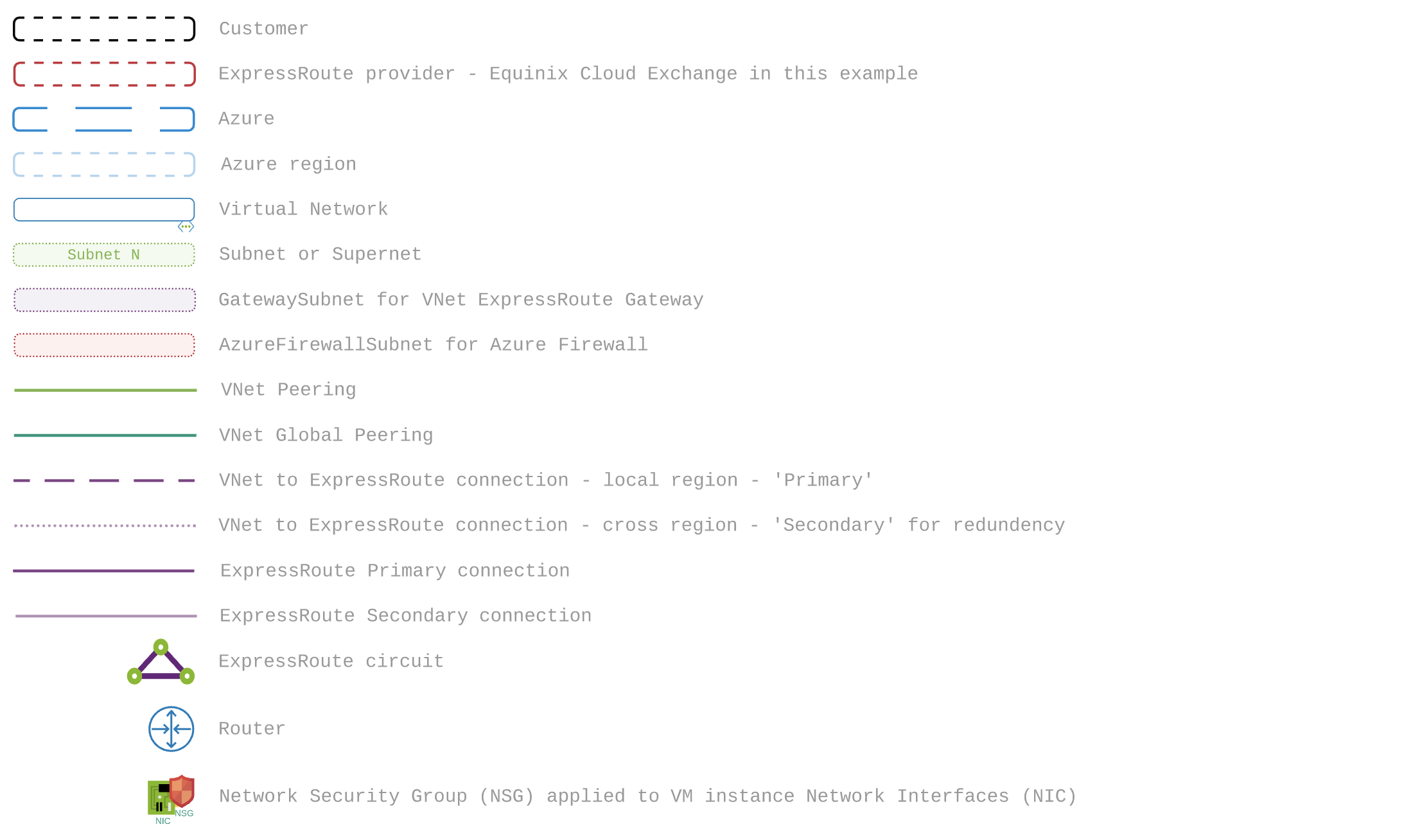

This diagram has a key of:

Summary

As you can see, something as ‘simple’ as a Hub and Spoke network topology in the cloud, in this case Azure, has many considerations, dependencies and constraints. That makes defining the core requirements really important, as those can pivot the design in a number of different directions.

This has been a rather long post and tricky to outline in a top to bottom uniform way. There are too many overlapping nuances, and the target is always moving, with new services and new functionality coming out all the time, leaving most blogs and documentation obsolete within a year, or sometimes sooner. I hope I’ve done enough to outline the key points for consideration with any design. Enjoy!

- Best practice: Implement a hub and spoke network topology

- Best practice: Implement a hub and spoke network topology

- “Each virtual network can have only one VPN gateway” - What is VPN Gateway?

- Highly Available Cross-Premises and VNet-to-VNet Connectivity